5 ways to deploy your own large language model

The only difference is an additional RLHF (Reinforcement Learning from Human Feedback) step on top of pre-training and supervised fine-tuning. During the pre-training phase, LLMs are trained to predict the next token in the text. The attention mechanism in a large language model lets the model weigh each element of the input text by its relevance to the task at hand. These attention layers help the model produce more precise outputs. So, let’s take a deep dive into the world of large language models and explore what makes them so powerful. LLMs are useful for a wide range of applications, and by building one from scratch, you come to understand the underlying ML techniques and can customize the LLM to your specific needs.
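To make the attention idea above concrete, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The vectors are made-up toy values and the function names are illustrative; a real model learns these vectors and runs many attention heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Relevance score of each token: dot product of the query with its key.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

# Toy 2-dimensional vectors for three tokens (assumed values, not learned).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

output, weights = attention(query, keys, values)
print(weights)  # tokens whose keys align with the query get more weight
```

In this toy setup the first and third tokens receive equal, larger weights than the second, because their keys point in the same direction as the query.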

Tools like derwiki/llm-prompt-injection-filtering and laiyer-ai/llm-guard are in their early stages but are working toward preventing prompt injection. Input enrichment tools aim to contextualize and package the user’s query in a way that will generate the most useful response from the LLM. These evaluations are considered “online” because they assess the LLM’s performance during user interaction. In-context learning can be done in a variety of ways, such as providing examples, rephrasing your queries, and adding a sentence that states your goal at a high level.
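As a rough illustration of in-context learning, the sketch below assembles a few-shot prompt from a goal statement, a handful of worked examples, and the user's query. The `build_prompt` helper and the `Goal:` / `Input:` / `Output:` layout are assumptions made for this example, not a format required by any particular model.

```python
def build_prompt(goal, examples, query):
    """Assemble a few-shot prompt: goal statement, worked examples, then the query."""
    lines = [f"Goal: {goal}", ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The final entry is left open so the model completes the output.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_prompt(
    goal="Classify the sentiment of each review as positive or negative.",
    examples=[
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    query="Setup took five minutes and everything worked.",
)
print(prompt)
```

The resulting string would be sent to the model as-is; no model weights change, which is what separates in-context learning from fine-tuning.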

Data preparation

Enterprises must balance this tradeoff to best suit their needs and extract ROI from their LLM initiative. Building an enterprise-specific custom LLM lets businesses pursue opportunities tailored to their requirements, industry dynamics, and customer base. There is also RLAIF (Reinforcement Learning from AI Feedback), which can be used in place of RLHF. The main difference is that, instead of a human, an AI model serves as the evaluator or critic, providing feedback to the AI agent during the reinforcement learning process. However, the decision to build an LLM should be reviewed carefully. It requires significant resources, both in computational power and in data availability.

The Challenges, Costs, and Considerations of Building or Fine-Tuning an LLM – hackernoon.com


Posted: Fri, 01 Sep 2023 07:00:00 GMT

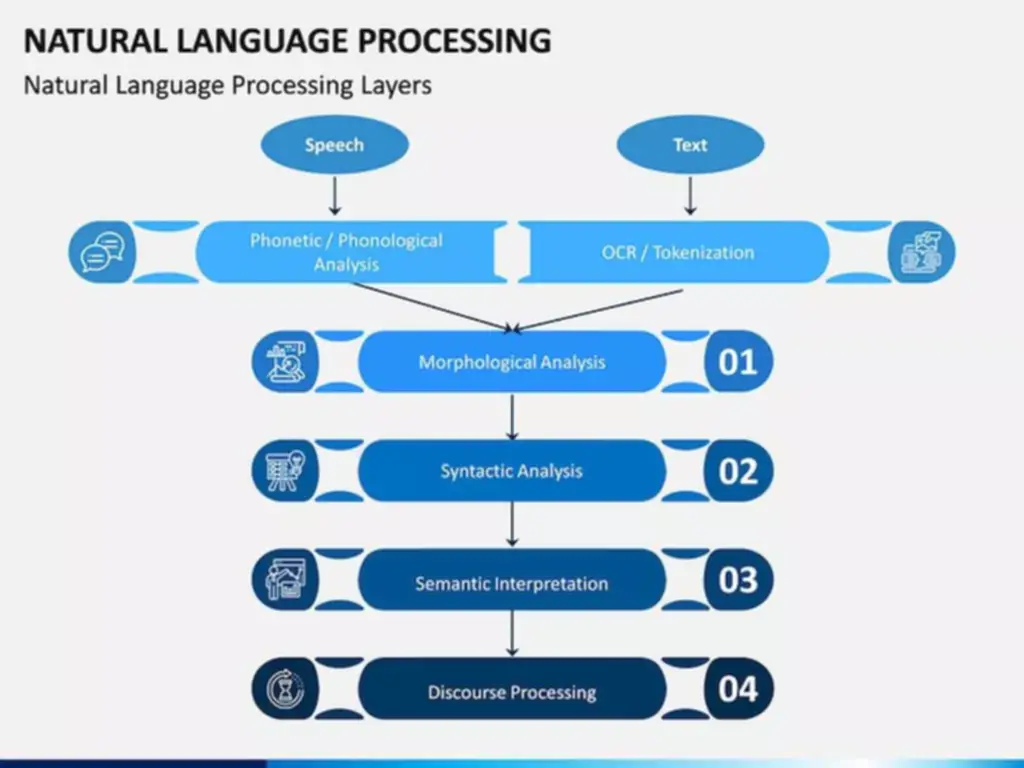

These models have varying levels of complexity and performance and have been used in a variety of natural language processing and natural language generation tasks. During the pre-training phase, LLMs are trained to predict the next token in the text. The history of large language models can be traced back to the 1960s, when the first steps were taken in natural language processing (NLP). In 1966, MIT professor Joseph Weizenbaum published ELIZA, one of the earliest NLP programs.

Misinformation and Fake Content

Large Language Models (LLMs) and Foundation Models (FMs) have demonstrated remarkable capabilities in a wide range of Natural Language Processing (NLP) tasks. They have been used for tasks such as language translation, text summarization, question answering, sentiment analysis, and more. An intuition is that preference models need a similar capacity to understand the text given to them as a model would need in order to generate that text. Custom large language models offer customization, control, and accuracy for specific domains, use cases, and enterprise requirements. Enterprises should therefore consider building their own custom large language model, tailored to their needs, industry, and customer base. Fine-tuning can result in a highly customized LLM that excels at a specific task, but it uses supervised learning, which requires time-intensive labeling.

It’s built on top of the Boundary Forest algorithm, says co-founder and co-CEO Devavrat Shah. According to a July report from Netskope Threat Labs, source code is posted to ChatGPT more than any other type of sensitive data, at a rate of 158 incidents per 10,000 enterprise users per month. You can find an overview of LLMs on the Hugging Face Open LLM Leaderboard. Researchers generally follow a defined process when creating LLMs.

The 40-hour LLM application roadmap: Learn to build your own LLM applications from scratch

Building quick iteration cycles into the product development process allows teams to fail and learn fast. At GitHub, the main mechanism for us to quickly iterate is an A/B experimental platform. This includes tasks such as monitoring the performance of LLMs, detecting and correcting errors, and upgrading Large Language Models to new versions.

- This is particularly useful for tasks that involve understanding long-range dependencies between tokens, such as natural language understanding or text generation.

- These models are pretrained on large-scale datasets and are capable of generating coherent and contextually relevant text.

- From there, they make adjustments to both the model architecture and hyperparameters to develop a state-of-the-art LLM.

- In marketing, generative AI is being used to create personalized advertising campaigns and to generate product descriptions.

- It is built upon PaLM, a 540-billion-parameter language model demonstrating exceptional performance in complex tasks.

- To minimize this impact, energy-efficient training methods should be explored.

A vector database stores information as numerical vectors (embeddings) and retrieves entries by how close they are to a query vector. A rough analogy is an alphabetical list, where the closer two entries sit in the list, the more related they are, except that a vector database measures closeness across many dimensions at once. EleutherAI launched a framework called the Language Model Evaluation Harness to compare and evaluate LLMs’ performance.
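The lookup a vector database performs can be sketched in a few lines of plain Python. This is an in-memory toy, assuming hand-written 3-dimensional vectors and hypothetical helper names (`cosine_similarity`, `top_k`); a production system would use embeddings from a model and an approximate nearest-neighbor index rather than a full scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vector, index, k=2):
    """Return the k documents whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vector, vec), doc) for doc, vec in index.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]

# Toy index: document title -> made-up embedding vector.
index = {
    "How to reset a password": [0.9, 0.1, 0.0],
    "Billing and invoices": [0.1, 0.9, 0.1],
    "Account login problems": [0.8, 0.2, 0.1],
}

results = top_k([1.0, 0.0, 0.0], index, k=2)
print(results)
```

With these toy vectors, the two account-related documents rank above the billing one because their vectors point in nearly the same direction as the query.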

How to train an open-source foundation model into a domain-specific LLM?

It is instrumental when you can’t curate sufficient datasets to fine-tune a model. When performing transfer learning, ML engineers freeze the model’s existing layers and append new trainable ones on top. If you opt for training from scratch instead, be mindful of the enormous computational resources the process demands, the data quality it depends on, and the high cost. Training a model from scratch is resource intensive, so it’s crucial to curate and prepare high-quality training samples. As Gideon Mann, Head of Bloomberg’s ML Product and Research team, stressed, dataset quality directly impacts model performance.
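The freeze-and-append pattern described above can be shown without any ML framework. The sketch below is a deliberately tiny stand-in: `FROZEN_WEIGHTS` plays the role of a pre-trained layer that is never updated, and only a new linear head is trained with gradient descent. The weights, samples, and targets are all invented for illustration; in practice you would do the same thing with a deep learning library and a real pre-trained model.

```python
# Frozen "pre-trained" layer: fixed weights that training never touches.
FROZEN_WEIGHTS = [[0.5, -0.2], [0.1, 0.8]]

def frozen_features(x):
    """Apply the frozen layer followed by a ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]

def predict(head, bias, x):
    """Run the frozen layer, then the trainable linear head."""
    feats = frozen_features(x)
    return sum(h * f for h, f in zip(head, feats)) + bias

def mean_squared_error(head, bias, samples):
    return sum((predict(head, bias, x) - t) ** 2 for x, t in samples) / len(samples)

def train_head(samples, epochs=200, lr=0.1):
    """Fit only the new head; the frozen layer receives no updates."""
    head, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            feats = frozen_features(x)
            error = sum(h * f for h, f in zip(head, feats)) + bias - target
            head = [h - lr * error * f for h, f in zip(head, feats)]
            bias -= lr * error
    return head, bias

# Made-up task data: (input vector, target value).
samples = [([1.0, 0.0], 1.6), ([0.0, 1.0], 1.3), ([1.0, 1.0], 2.0), ([2.0, 1.0], 3.1)]

loss_before = mean_squared_error([0.0, 0.0], 0.0, samples)
head, bias = train_head(samples)
loss_after = mean_squared_error(head, bias, samples)
print(loss_before, loss_after)
```

Because only the head has parameters to update, training is cheap and needs far less data than fitting the whole network, which is the appeal of transfer learning when labeled data is scarce.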

In today’s business world, Generative AI is being used in a variety of industries, such as healthcare, marketing, and entertainment. Choosing the appropriate dataset for pretraining is critical as it affects the model’s ability to generalize and comprehend a variety of linguistic structures. A comprehensive and varied dataset aids in capturing a broader range of language patterns, resulting in a more effective language model. To enhance performance, it is essential to verify if the dataset represents the intended domain, contains different genres and topics, and is diverse enough to capture the nuances of language. Foundation Models serve as the building blocks for LLMs and form the basis for fine-tuning and specialization. These models are pretrained on large-scale datasets and are capable of generating coherent and contextually relevant text.

By open-sourcing your models, you can contribute to the broader developer community. Developers can use open-source models to build new applications, products, and services, or as a starting point for their own custom models. This collaboration can lead to faster innovation and a wider range of AI applications. At its core, an LLM is a neural network based on the transformer architecture, which Google researchers introduced in 2017 in a paper titled “Attention Is All You Need”. The goal of the model is to predict the text that is likely to come next.
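Next-token prediction can be illustrated with something far simpler than a transformer. The sketch below is a bigram counter, not a neural network: it records which word follows which in a made-up corpus and predicts the most frequent follower. The function names and the corpus are assumptions for this example only.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every token, which tokens follow it and how often."""
    tokens = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the model reads the next token and the next token follows the next word"
counts = train_bigram(corpus)

print(predict_next(counts, "the"))   # most common word after "the"
print(predict_next(counts, "next"))  # most common word after "next"
```

An LLM pursues the same objective but conditions on the whole preceding context through learned attention layers instead of a lookup table of word pairs.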

Datasaur Launches LLM Lab to Build and Train Custom ChatGPT and Similar Models – Datanami


Posted: Fri, 27 Oct 2023 07:00:00 GMT

With insights into batch size hyperparameters and a thorough overview of the PyTorch framework, you’ll switch between CPU and GPU processing for optimal performance. Concepts such as embedding vectors, dot products, and matrix multiplication lay the groundwork for more advanced topics. You can train a foundational model entirely from a blank slate with industry-specific knowledge.


The attention mechanism is used in a variety of LLM applications, such as machine translation, question answering, and text summarization. For example, in machine translation, the attention mechanism allows LLMs to focus on the most important parts of the source text when generating the translated text. Similarly, Transformer-based models are being used to develop new machine translation models that translate text between languages more accurately than earlier approaches.

Whether training a model from scratch or fine-tuning one, ML teams must clean their datasets and ensure they are free from noise, inconsistencies, and duplicates. The first technical decision you need to make is selecting the architecture for your private LLM. Options include fine-tuning pre-trained models, starting from scratch, or using open-source models like GPT-2 as a base. The choice will depend on your technical expertise and the resources at your disposal.
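A minimal cleaning pass along these lines might look like the sketch below. It assumes that noise means HTML-like tags and irregular whitespace, that very short fragments are not worth keeping, and that duplicates are exact matches after normalization; the `clean_corpus` helper and the `min_words` threshold are illustrative choices, and real pipelines typically add near-duplicate detection and language or quality filters.

```python
import re

def clean_corpus(documents, min_words=3):
    """Strip markup, normalize whitespace, drop short fragments and duplicates."""
    seen = set()
    cleaned = []
    for doc in documents:
        text = re.sub(r"<[^>]+>", " ", doc)       # remove HTML-like tags
        text = re.sub(r"\s+", " ", text).strip()  # collapse runs of whitespace
        if len(text.split()) < min_words:
            continue  # too short to be a useful training sample
        key = text.lower()
        if key in seen:
            continue  # exact duplicate after normalization
        seen.add(key)
        cleaned.append(text)
    return cleaned

raw = [
    "<p>LLMs predict the next token.</p>",
    "LLMs  predict the next   token.",
    "ok",
    "Fine-tuning adapts a pre-trained model.",
]

cleaned = clean_corpus(raw)
print(cleaned)
```

Here the second entry is dropped as a duplicate of the first once tags and spacing are normalized, and the one-word entry is dropped as noise.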

Architectural decisions play a significant role in determining factors such as the number of layers, attention mechanisms, and model size. These decisions are essential in developing high-performing models that can accurately perform natural language processing tasks. Language models have gained significant attention in recent years, revolutionizing various fields such as natural language processing, content generation, and virtual assistants. One of the most prominent examples is OpenAI’s ChatGPT, a large language model that can generate human-like text and engage in interactive conversations. This has sparked the curiosity of enterprises, leading them to explore the idea of building their own large language models (LLMs). The training corpus used for Dolly consists of a diverse range of texts, including web pages, books, scientific articles and other sources.

Developed by Kasisto, the model enables transparent, safe, and accurate use of generative AI models when servicing banking customers. Training a private LLM requires substantial computational resources and expertise. Depending on the size of your dataset and the complexity of your model, this process can take several days or even weeks. Cloud-based solutions and high-performance GPUs are often used to accelerate training.

In this article, we will walk you through the basic steps to create an LLM from the ground up. Large language models (LLMs) are one of the most exciting developments in artificial intelligence. They have the potential to change a wide range of industries, from healthcare to customer service to education. But to realize this potential, we need more people who know how to build and deploy LLM applications.